taby said:

You had once before brought up the possibility of switching from using an array to using an SSBO.

First, some nitpicking on terminology…

When you use a local array allocated in a shader, unique to a single thread, it may not really be an array at all under the hood. That's because the thread has no fast local memory to put it in. It only has registers, and registers generally can not be addressed with a runtime array index. So the compiler might need to emit lots of branches to find the register associated with the given index (or spill the array to slow scratch memory), which is why it's potentially slow.

In contrast, the SSBO is just VRAM. So it will be addressable by index in O(1), like a real array.
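To make the difference concrete, here is a minimal sketch in plain Python (not shader code) of the two access patterns. The function names and the four-register layout are made up for illustration, and a real compiler may do something different, such as spilling the array to scratch memory.

```python
def read_from_registers(r0, r1, r2, r3, i):
    # Hypothetical: four separate "registers" that can not be addressed by a
    # runtime index, so the lookup becomes a chain of compares and branches.
    if i == 0:
        return r0
    if i == 1:
        return r1
    if i == 2:
        return r2
    return r3


def read_from_buffer(buffer, pixel_index, slots_per_pixel, i):
    # SSBO-style access: one flat buffer shared by all pixels.
    # Each pixel owns a contiguous slice, so the lookup is a single
    # address computation, independent of the array length.
    return buffer[pixel_index * slots_per_pixel + i]
```

The branch chain grows with the array length, while the buffer lookup stays constant. What the sketch does not show is that each buffer read goes to memory that is far slower than a register.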

The problem, of course, is that you need huge amounts of memory, e.g. one array for each pixel.

And VRAM is much slower than registers, even if cached.
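To put a rough number on the memory requirement, here is a back-of-the-envelope calculation. The resolution, the 8 entries per pixel and the 32 bytes per entry are assumptions picked for illustration, not values from the renderer being discussed.

```python
def per_pixel_array_bytes(width, height, entries_per_pixel, bytes_per_entry):
    # One array per pixel, all stored in a single buffer.
    return width * height * entries_per_pixel * bytes_per_entry


# Assumed: 1080p, 8 path vertices per pixel, 32 bytes per vertex.
total = per_pixel_array_bytes(1920, 1080, 8, 32)
print(total / 2**20)  # 506.25 (MiB)
```

So even a short array per pixel lands in the hundreds of megabytes, and all of it has to be read and written every frame.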

If I had to guess, I'd say using an SSBO is not worth it, and it will end up even slower.

But I'm not sure at all. It's probably not much work to try it out.

But no matter what, you won't be able to make it fast enough this way.

What made realtime PT possible was mainly the progress on reducing sample counts, enabled by spatiotemporal denoising with Quake II RTX, and later additionally with ReSTIR. NVIDIA's next step seems to be a neural radiance cache, which is ML where the training isn't done offline but happens at runtime, improving based on the current view and lighting conditions.

If you're serious about path traced games, there's no way around diving into these complex topics. It's why current games doing this usually get by with only 1 sample per pixel. It's also why their results are blurry, which is easy to see in current RTX Remix mods for old games.

If you just want to get rid of the array, you likely have to sacrifice the bidirectional method for those technical reasons (which might be necessary anyway).

The truth is that PT conflicts with the way GPUs work, and accelerating the traceRay function is not enough to change this.

The other truth is that PT is inefficient by definition, even when ignoring current HW memory limitations. It is easy and flexible, so ideal for offline, but for realtime apps we want some form of caching to share work, instead of redoing it independently for each pixel and frame again and again. But because any cache is a discretization, we must trade some accuracy for the win (which any form of spatiotemporal denoising or sample reuse does anyway, just not with ideal efficiency).
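The simplest form of such a cache is temporal accumulation: keep last frame's value per pixel and blend the new noisy sample into it. The sketch below is an illustration in plain Python, not the method of any particular denoiser or of ReSTIR; the blend factor `alpha`, the helper names and the uniform noise model are all assumptions.

```python
import random
import statistics


def accumulate(history, sample, alpha):
    # Exponential moving average: reuse old work, blend in a bit of new work.
    return history + alpha * (sample - history)


def converge(samples, alpha):
    # Run the accumulator over a sequence of per-frame samples for one pixel.
    value = samples[0]
    for s in samples[1:]:
        value = accumulate(value, s, alpha)
    return value


def noisy_pixel(frames, seed):
    # Assumed noise model: 1 sample per frame, uniform in [0, 1), true mean 0.5.
    rng = random.Random(seed)
    return [rng.random() for _ in range(frames)]


# The win: compare the spread of raw 1-sample results with cached results.
raw = [noisy_pixel(64, seed)[-1] for seed in range(200)]
cached = [converge(noisy_pixel(64, seed), 0.1) for seed in range(200)]
print(statistics.pvariance(raw), statistics.pvariance(cached))

# The cost: lighting jumps from 0 to 1, and 10 frames later the cache
# has only reached 1 - 0.9**10, about 0.65.
lagged = converge([0.0] + [1.0] * 10, 0.1)
print(lagged)
```

The cached values are far less noisy than the raw ones, but the cache lags behind when the scene changes. That lag is the accuracy being traded away, and it is what shows up as blur or ghosting.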

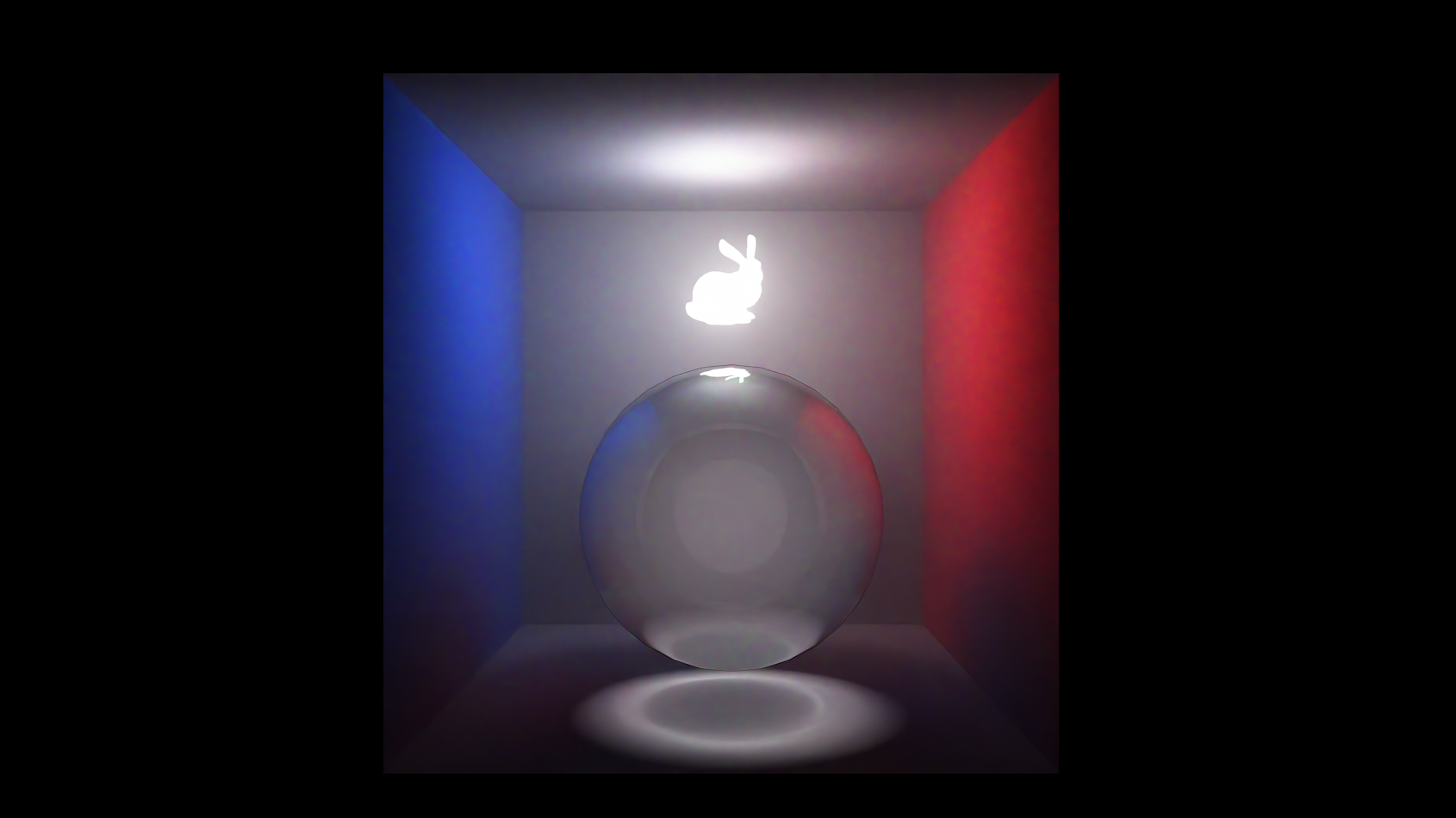

I can tell you what to do by looking into my crystal ball, which shows me the future:

Don't work too hard on rendering.

Work on the game and render just boxes.

Then you enter this into the prompt each frame:

‘Generate an image that looks real. Red boxes should become castles, and blue boxes become knights with swords. Make up the rest yourself.’

Done. \:D/

Well, I never know myself if I'm joking or if I'm serious, currently.

It really depends on what your crystal ball shows. ; )