frob said:

> The way to improve it is to make a polygonal strip, something wide enough to fit your view frustum taking one pixel when projected out, and render that instead of a line strip. In many ways that type of triangle strip would be less work for the graphics system overall.

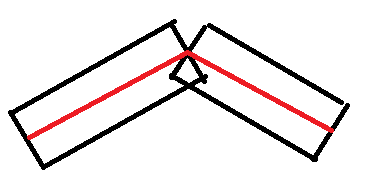

AFAIK, that's what the driver already does when drawing lines. The two vertices of each segment are duplicated and displaced sideways, so each segment becomes a quad of the given width made of two triangles. Since GPUs can only draw triangles, not points, lines, or quads, the triangles must have some area. Otherwise pixel coverage would always be zero and no pixels would be drawn.
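A minimal sketch of that expansion, assuming 2D screen-space coordinates. `segment_to_quad` and `triangle_area` are hypothetical helpers for illustration, not what any particular driver actually runs. It also shows the zero-width case: both triangles collapse to zero area, so nothing could ever be covered.

```python
import math

def segment_to_quad(p0, p1, width):
    """Expand segment p0->p1 into two triangles forming a quad of `width`.

    Hypothetical helper: each endpoint is duplicated and pushed by
    +/- width/2 along the segment's unit normal.
    """
    (x0, y0), (x1, y1) = p0, p1
    dx, dy = x1 - x0, y1 - y0
    length = math.hypot(dx, dy)
    if length == 0.0:
        raise ValueError("degenerate segment")
    # Unit normal (-dy, dx) / length, scaled to half the line width.
    nx = -dy / length * width / 2
    ny = dx / length * width / 2
    a, b = (x0 + nx, y0 + ny), (x0 - nx, y0 - ny)
    c, d = (x1 + nx, y1 + ny), (x1 - nx, y1 - ny)
    # Two triangles sharing the diagonal b-c.
    return [(a, b, c), (c, b, d)]

def triangle_area(t):
    """Unsigned area of a 2D triangle via the cross product."""
    (ax, ay), (bx, by), (cx, cy) = t
    return abs((bx - ax) * (cy - ay) - (cx - ax) * (by - ay)) / 2

print(sum(triangle_area(t) for t in segment_to_quad((0.0, 0.0), (4.0, 0.0), 1.0)))  # 4.0 (length * width)
print(sum(triangle_area(t) for t in segment_to_quad((0.0, 0.0), (4.0, 0.0), 0.0)))  # 0.0
```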

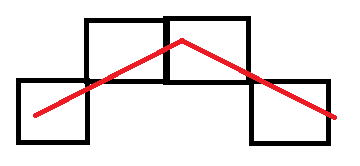

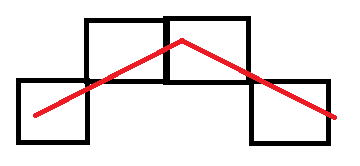

The conversion to quads can be observed by setting a large line width, IIRC. It should look like this:

The black quads are generated from the given red line segments.

I don't think a custom implementation could do anything about the tradeoff: with a small width, some pixels are missing; with a large width, some pixels are drawn twice.
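A rough sketch of that tradeoff, assuming the rasterizer tests pixel centers against the line's quad (end caps and the exact fill rule are simplified; `covered_pixels` and `per_column_counts` are hypothetical helpers). For a segment with slope 0.3, a width of 0.8 leaves some columns empty, while a width of 1.2 covers some columns twice.

```python
import math

def covered_pixels(p0, p1, width, cols, rows):
    """Pixels whose centers fall inside the width-`width` quad around p0->p1."""
    (x0, y0), (x1, y1) = p0, p1
    length = math.hypot(x1 - x0, y1 - y0)
    ux, uy = (x1 - x0) / length, (y1 - y0) / length  # unit direction
    hits = set()
    for i in range(cols):
        for j in range(rows):
            cx, cy = i + 0.5 - x0, j + 0.5 - y0  # pixel center relative to p0
            along = cx * ux + cy * uy            # position along the segment
            across = -cx * uy + cy * ux          # signed distance from the line
            if 0.0 <= along < length and abs(across) < width / 2:
                hits.add((i, j))
    return hits

def per_column_counts(hits, cols):
    """Number of covered pixels in each column."""
    return [sum(1 for (i, _) in hits if i == col) for col in range(cols)]

seg = ((0.0, 0.0), (10.0, 3.0))
print(per_column_counts(covered_pixels(*seg, 0.8, 10, 5), 10))  # [1, 1, 1, 0, 1, 1, 0, 1, 1, 1]
print(per_column_counts(covered_pixels(*seg, 1.2, 10, 5), 10))  # [1, 1, 1, 2, 1, 1, 2, 1, 1, 1]
```

Any width in between only moves the holes or doubles around; for a fixed width, which columns get 0 or 2 pixels depends on where the line crosses the pixel centers.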

The only thing we could improve is the gaps at the vertices. But that's not the problem, and the gaps may look better with AA on anyway. (Similar to points, which become proper circles with AA on, but are only squares with AA off.)

Thus, if we want no duplicated pixels and no holes, the compute approach is simpler and more efficient. Achieving this with triangles would require careful and complicated construction of geometry that takes the pixel grid precisely into account.
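For comparison, a sketch of a pixel-exact rasterization as a compute pass might do it, assuming integer vertex positions. It uses Bresenham's algorithm, a standard technique swapped in here for illustration (the actual compute approach discussed may differ). Collecting pixels into a set stands in for writing a flag into a coverage mask, so a pixel touched by two segments is still written once.

```python
def bresenham(x0, y0, x1, y1):
    """Pixels of one segment: one pixel per step along the major axis,
    8-connected, both integer endpoints included."""
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    pixels = []
    while True:
        pixels.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return pixels
        e2 = 2 * err
        if e2 >= dy:  # step in x
            err += dy
            x0 += sx
        if e2 <= dx:  # step in y
            err += dx
            y0 += sy

def rasterize_polyline(points):
    """Union of all segment pixels of a line strip.

    The set plays the role of a coverage mask: shared vertices and sharp
    folds are marked only once, and the 8-connected steps leave no holes.
    """
    mask = set()
    for (xa, ya), (xb, yb) in zip(points, points[1:]):
        mask.update(bresenham(xa, ya, xb, yb))
    return mask

print(sorted(rasterize_polyline([(0, 0), (3, 3), (6, 0)])))
# [(0, 0), (1, 1), (2, 2), (3, 3), (4, 2), (5, 1), (6, 0)]
```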

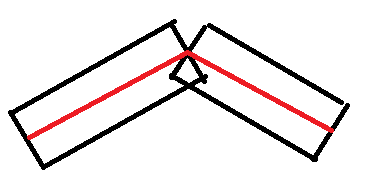

However, the current approach of generating only vertical lines actually is such an approach. It should work as desired, turning the example above into this:

You may need to experiment with subpixel accuracy, e.g. moving all vertices by half a pixel, but with some trial and error it should work.
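A minimal sketch of the vertical-lines idea, under assumptions not stated in the thread: the line strip is a graph y(x) sampled at every pixel-column boundary, rows count upward from the bottom as in OpenGL's default window coordinates, and `column_spans`/`span_to_ndc_quad` are hypothetical helpers. Each column becomes one quad spanning the rows between its two samples, so no pixel is covered twice. Neighboring columns always share the row containing their common sample, so there are no holes. The quad edges sit on integer pixel boundaries, so pixel centers (i + 0.5, j + 0.5) never lie exactly on an edge; this is where the half-pixel offset matters.

```python
import math

def column_spans(ys):
    """One vertical span (x, row_lo, row_hi) per pixel column.

    Assumed sampling: ys[k] is the curve's y (in pixels) at the left
    edge of column k, so len(ys) == number_of_columns + 1.
    """
    spans = []
    for x in range(len(ys) - 1):
        lo, hi = sorted((ys[x], ys[x + 1]))
        spans.append((x, math.floor(lo), math.floor(hi)))
    return spans

def span_to_ndc_quad(x, row_lo, row_hi, width, height):
    """Quad corners covering pixels (x, row_lo..row_hi), in NDC.

    Edges lie on integer pixel boundaries; corners are returned in
    triangle-strip order (bottom-left, bottom-right, top-left, top-right).
    """
    def to_ndc(px, py):
        return (2.0 * px / width - 1.0, 2.0 * py / height - 1.0)
    return [to_ndc(x, row_lo), to_ndc(x + 1, row_lo),
            to_ndc(x, row_hi + 1), to_ndc(x + 1, row_hi + 1)]

print(column_spans([0.2, 1.7, 4.1, 3.9, 0.5]))
# [(0, 0, 1), (1, 1, 4), (2, 3, 4), (3, 0, 3)]
print(span_to_ndc_quad(0, 0, 1, 4, 4))
# [(-1.0, -1.0), (-0.5, -1.0), (-1.0, 0.0), (-0.5, 0.0)]
```

If the vertex positions are instead computed at pixel centers, the edges land exactly on sample points and coverage is left to the fill rule; shifting everything by half a pixel moves them back onto the boundaries.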

Otherwise, I would accept some ‘artifacts’, or go back to the compute approach but make it efficient.